I am a third-year PhD student at the University of Washington, where I am advised by Byron Boots. I am interested in visual learning for robotics, bridging the gap between visual perception and robot planning and control. My recent research covers general robot perception problems in off-road navigation, including self-supervised traversability prediction from visual cues and representation learning from LiDAR and images.

Mail: shjung13 [at] cs [dot] washington [dot] edu

CV / LinkedIn / Google Scholar / GitHub

Preprint, 2025 arXiv

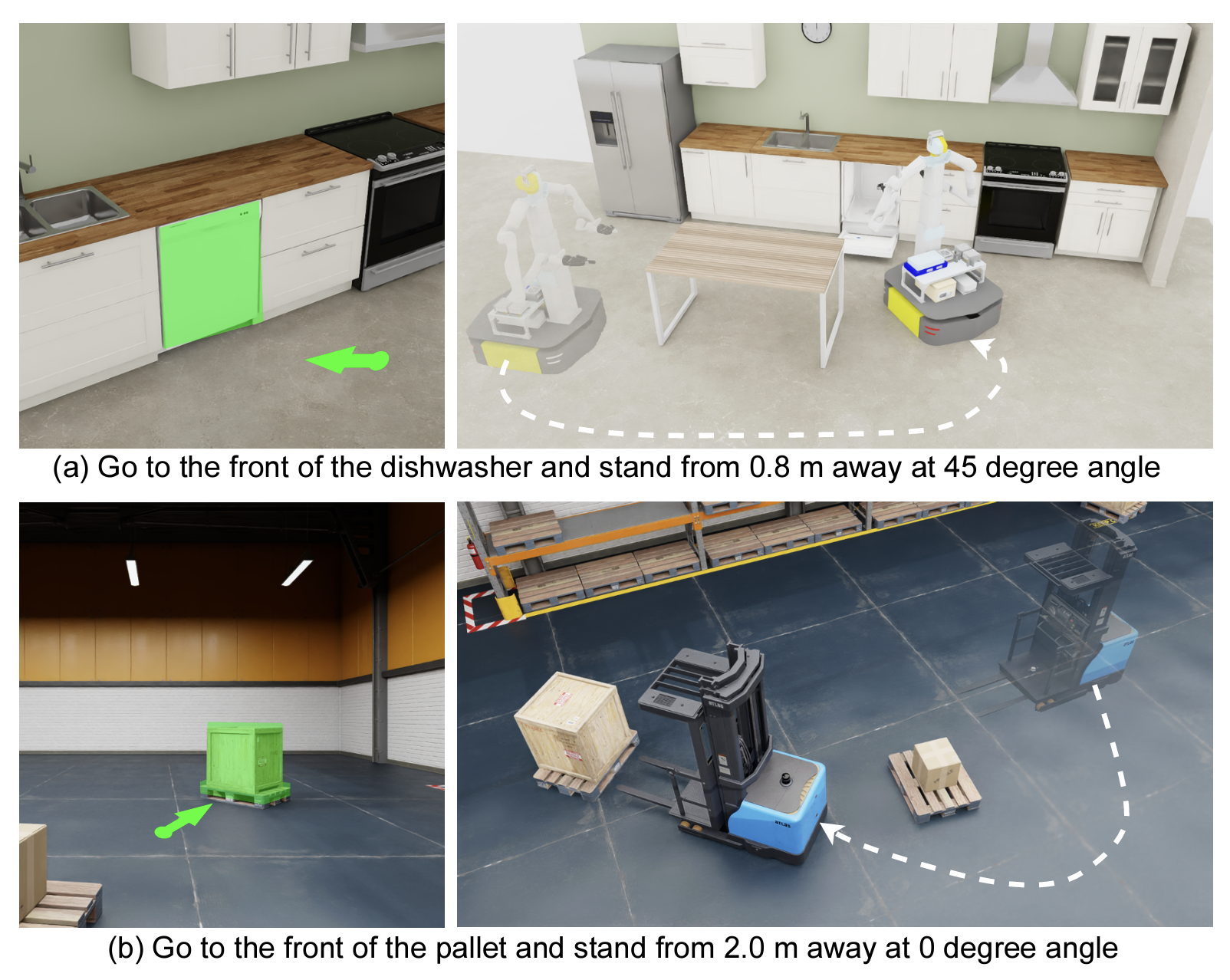

CoRL, 2025 arXiv

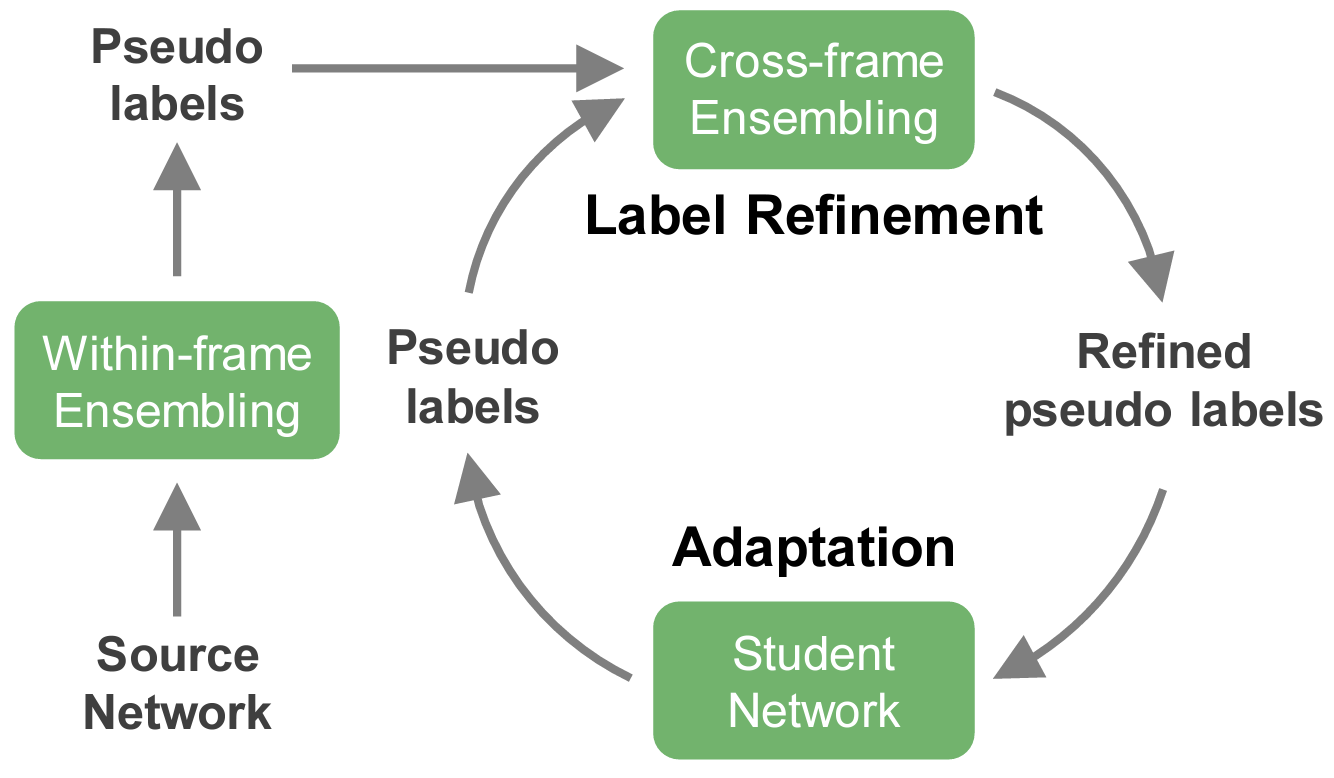

CoRL, 2025 arXiv / code

ICCV, 2025 arXiv / code

CVPR, 2025 paper

RA-L, 2025 arXiv

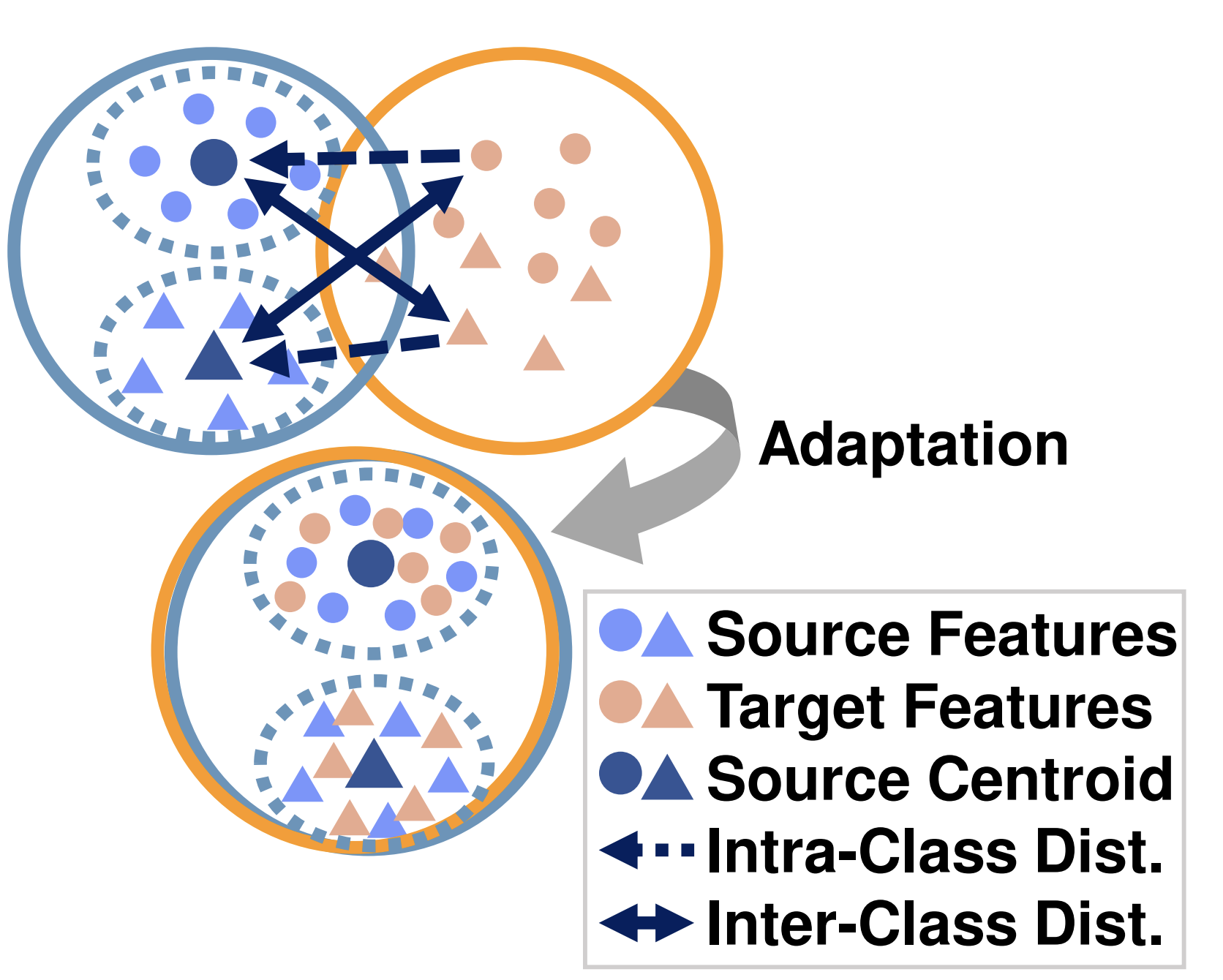

ICRA, 2024 arXiv / code

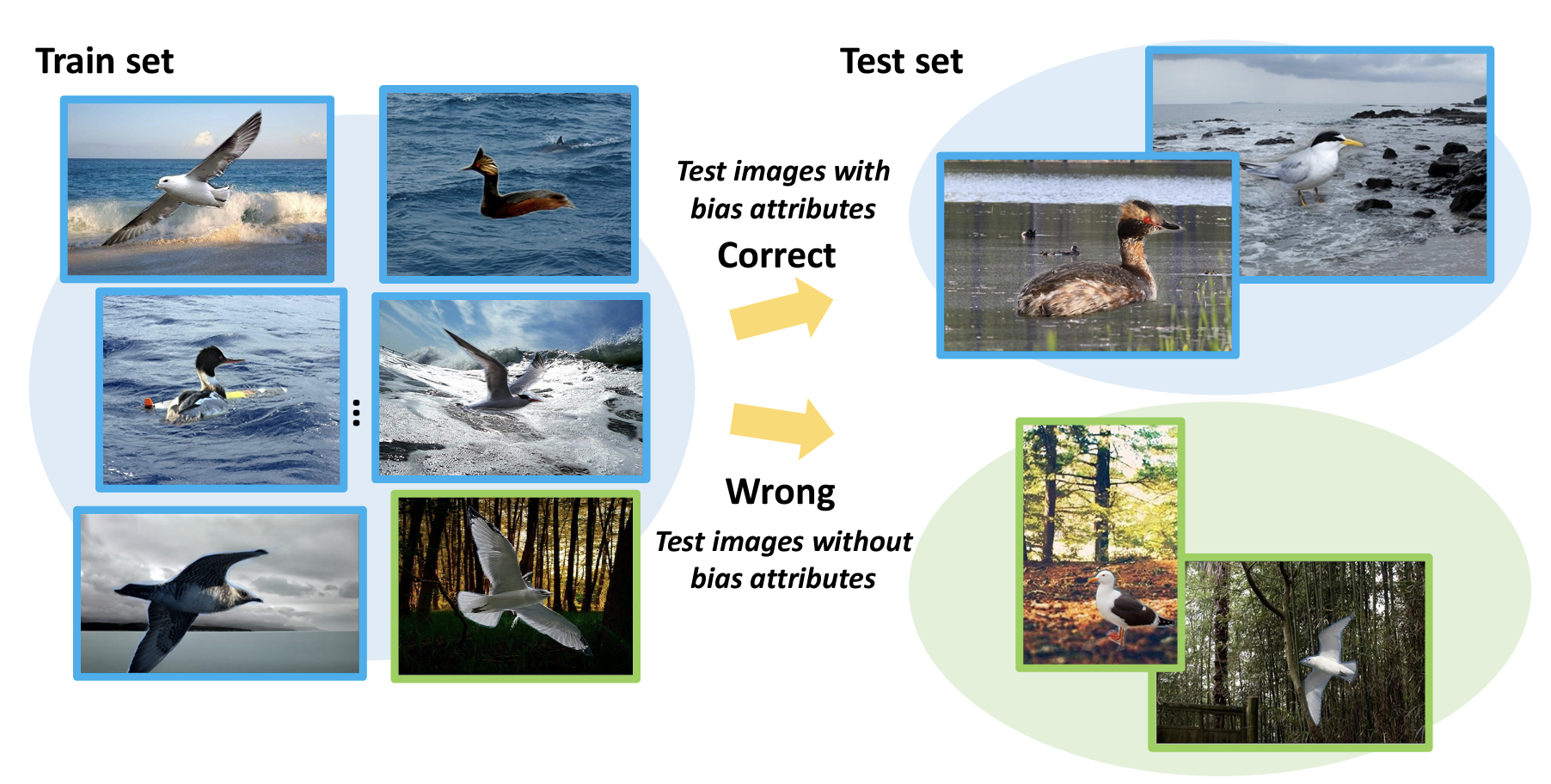

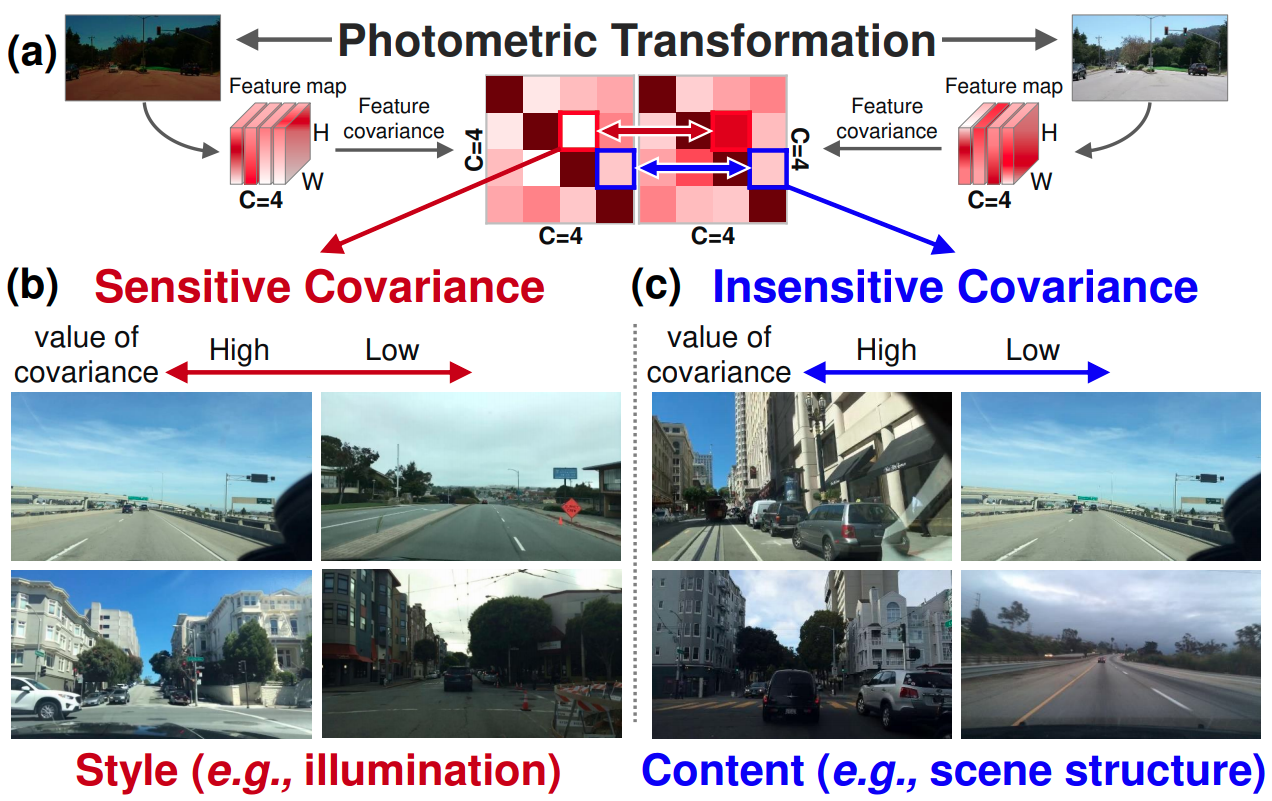

ICCV, 2023 Oral Presentation (<1.8%) arXiv / code

ICCV, 2023 arXiv

Preprint, 2023 arXiv

WACV, 2023 arXiv

Preprint, 2022 arXiv

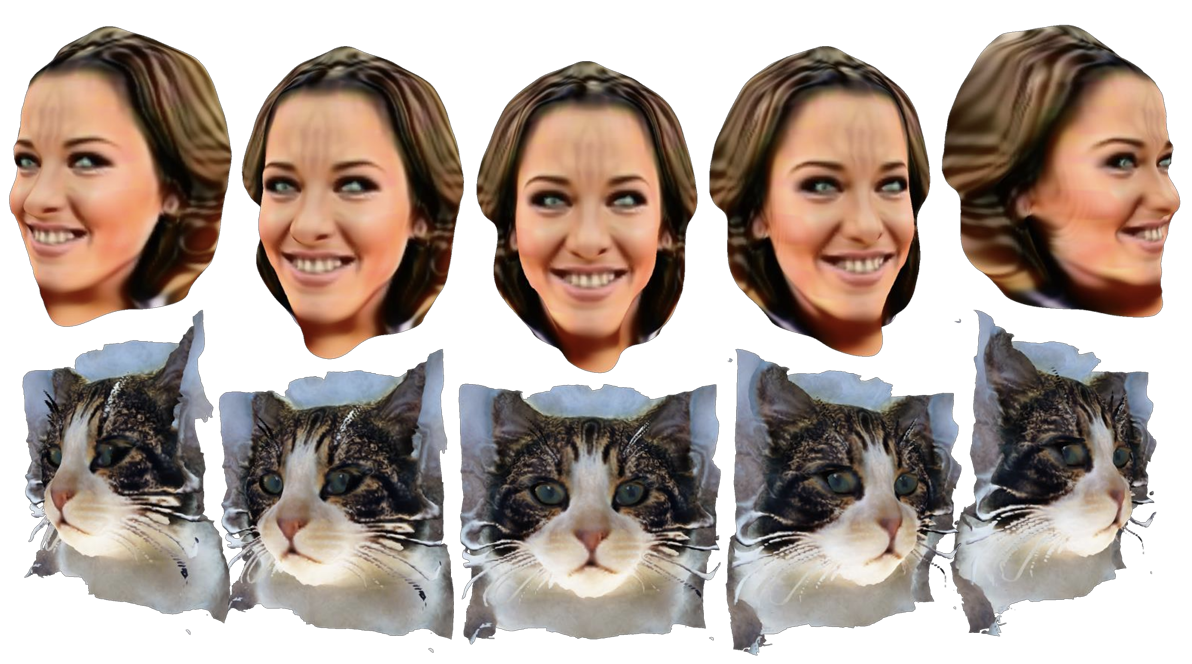

ICCV, 2021 Oral Presentation (<3.0%) arXiv / code

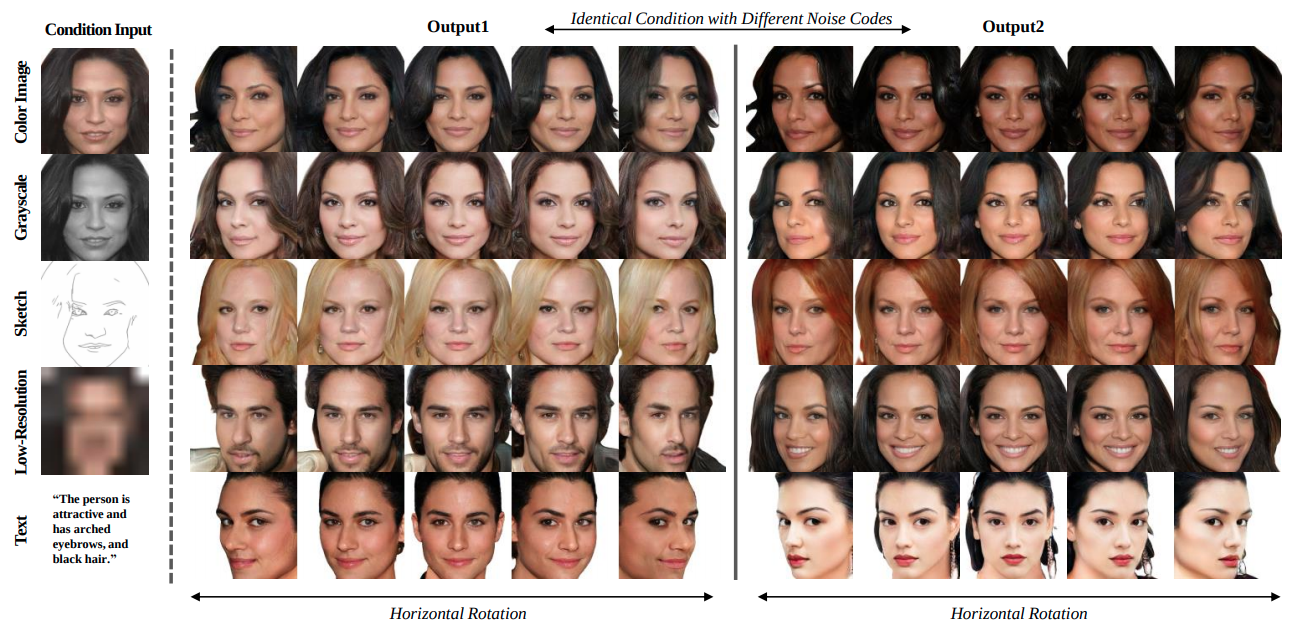

CVPR, 2021 Oral Presentation (<4.1%) arXiv / code

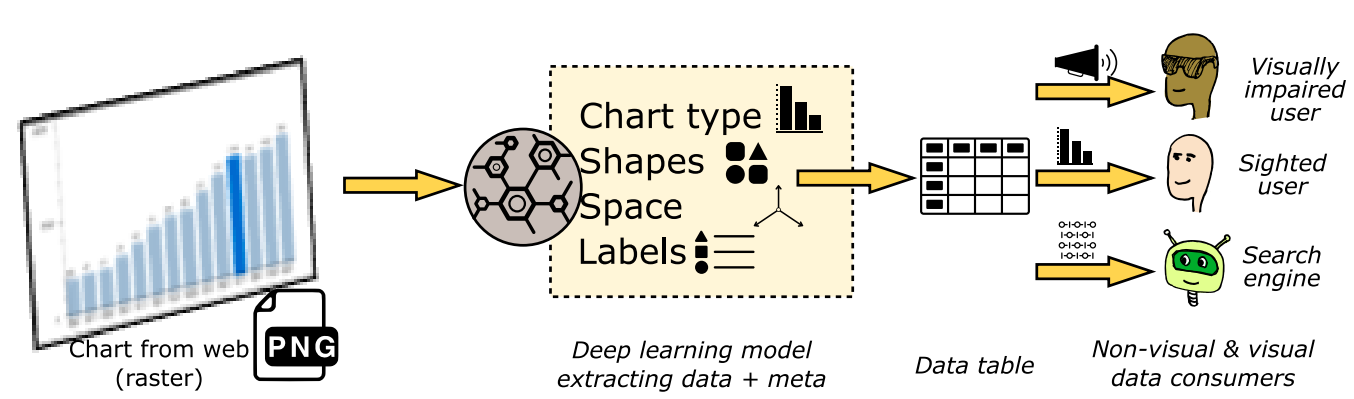

EuroVis, 2019 paper